OpenAI's new release is human-like, faster, and... free.

Yesterday's announcement is a leap forward in AI with emotion recognition, enhanced interaction, and lower costs.

If you would like exposure to the biggest opportunities at the intersection of generative AI, virtual reality, gaming, and Web3, reply to this email to learn more about our upcoming Fund II or meet us in person at SALT NYC.

In yesterday's livestream, OpenAI introduced GPT-4o, a single model that works across audio, vision, and text to make human-computer interaction feel more natural. But there's more. Let's explore ten highlights from the announcement and what is about to be deployed for all ChatGPT users.

1/ The new multimodal model will roll out for free over the next few weeks. It responds to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is close to human conversational response time. By OpenAI's figures, the earlier Voice Mode averaged 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4.

2/ The model isn't just faster; it's also cheaper to run. In the API, GPT-4o is priced at half the cost of its predecessor, GPT-4 Turbo, and runs twice as fast.

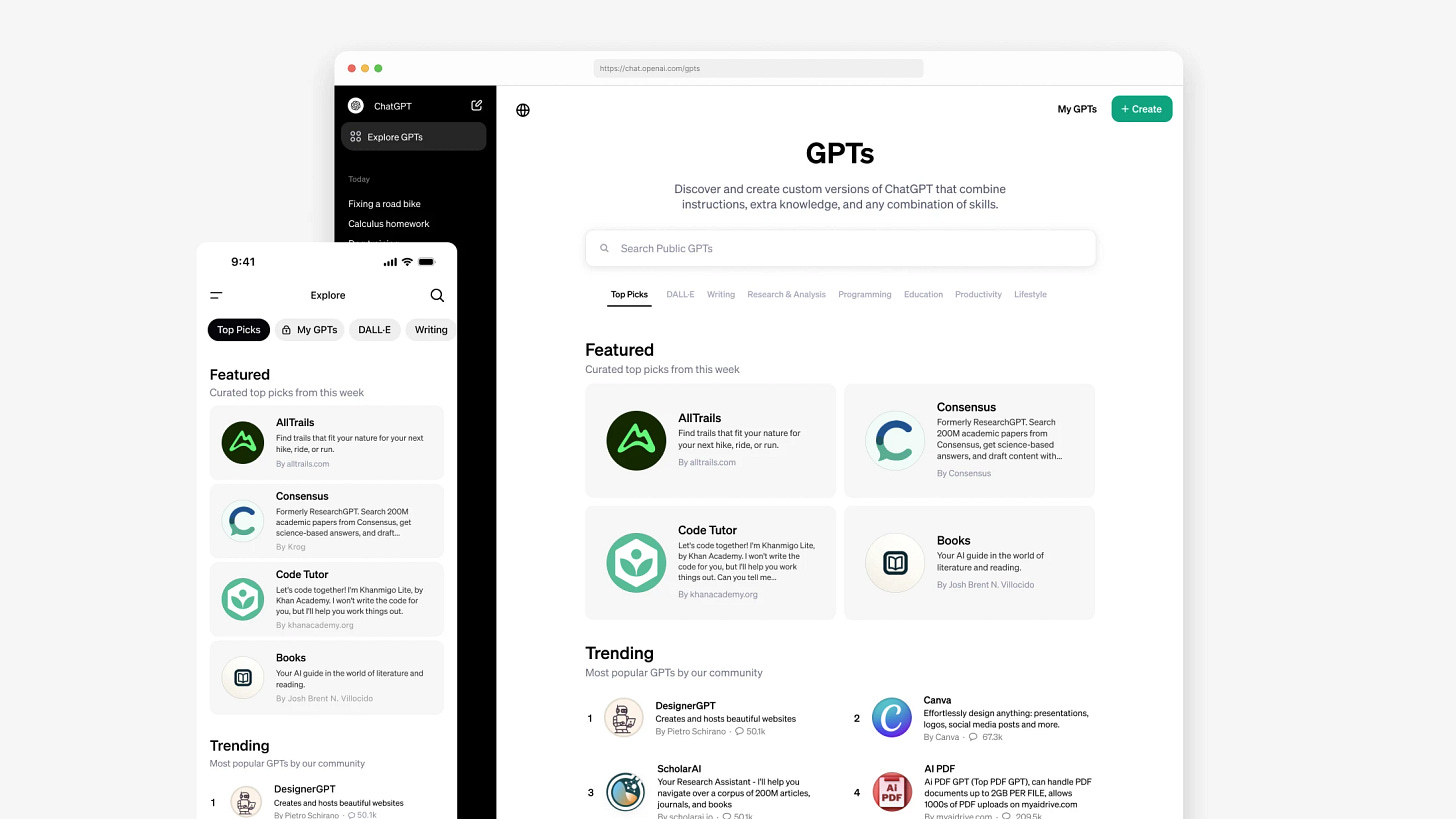

3/ GPT-4-level intelligence (through GPT-4o), GPTs, and the GPT Store will soon be accessible to all users, including those on the free tier, alongside a new desktop app. This change opens the GPT Store to a market of over 100 million potential users.

4/ OpenAI has significantly improved GPT-4o's capabilities in non-English languages, handling 50 languages with improved speed and quality. Previously, Voice Mode in ChatGPT chained three separate models (speech-to-text, GPT-3.5 or GPT-4, and text-to-speech), which added latency and discarded cues such as tone and emotion along the way. The new model replaces this pipeline with a single end-to-end model, which improves the interaction by preserving those nuances.

5/ Beyond tech specs, OpenAI showcased GPT-4o’s versatility through live demos that included singing duets and playing Rock Paper Scissors, illustrating its potential to transform entertainment and education.

6/ Math tutoring: in another demo, Salman Khan, the founder of Khan Academy, and his son used GPT-4o as a tutor to work through a math problem.

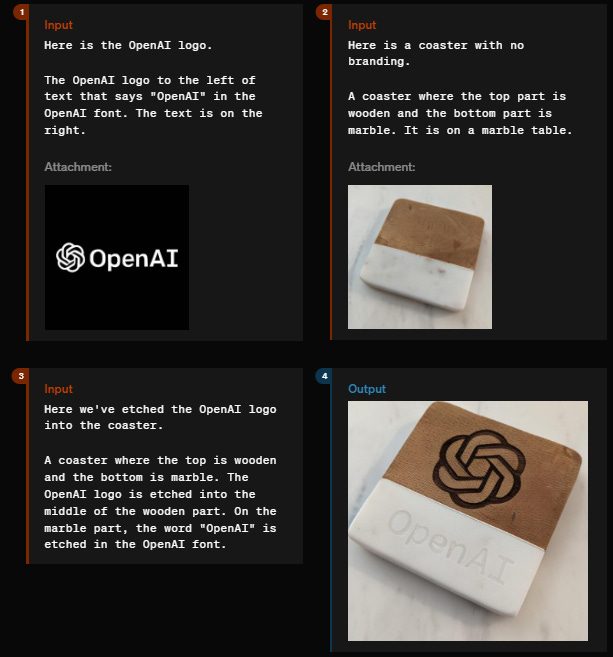

7/ New text-to-image capabilities, with significantly better rendering of text inside generated images (e.g., handwriting on a page).

8/ Character continuity across image generations: the same character can stay visually consistent from one generated image to the next.

9/ Text-to-3D capabilities: OpenAI's example demonstrates GPT-4o's new 3D object synthesis. The model generates multiple views of a 3D OpenAI logo from a textual description and compiles them into a detailed reconstruction.

10/ Text-to-sound: GPT-4o's sound synthesis capability allows it to create highly realistic audio from textual descriptions. This can be particularly beneficial in creating dynamic content for digital media and immersive environments where audio cues are critical to the user's experience.

In other exciting news, Apple and OpenAI are reportedly negotiating a contract to integrate ChatGPT into iOS 18, which could boost iPhone innovation and sales despite the current market slowdown. If the deal closes, it would underline OpenAI's impact on the technology industry and help establish ChatGPT as a leading AI service on global platforms.

Disclaimers:

This is not an offering. This is not financial advice. Always do your own research.

Our discussion may include predictions, estimates or other information that might be considered forward-looking. While these forward-looking statements represent our current judgment on what the future holds, they are subject to risks and uncertainties that could cause actual results to differ materially. You are cautioned not to place undue reliance on these forward-looking statements, which reflect our opinions only as of the date of this presentation. Please keep in mind that we are not obligating ourselves to revise or publicly release the results of any revision to these forward-looking statements in light of new information or future events.